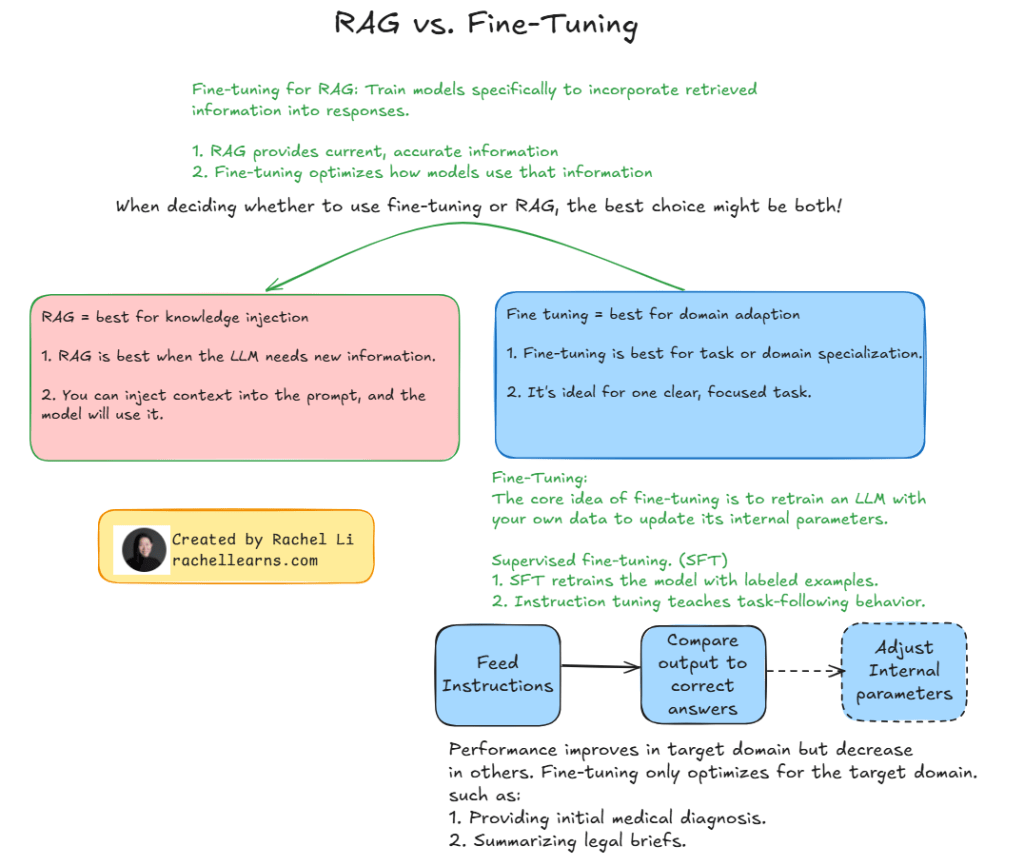

When it comes to adapting LLMs, it’s not always RAG or fine-tuning — sometimes, the best choice is both.

🔍 RAG (Retrieval-Augmented Generation)

Best for knowledge injection — perfect when your LLM needs access to current or external information.

At query time, relevant documents are retrieved and added to the prompt, so the model can ground its answer in them without any retraining.
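A minimal sketch of the retrieve-then-inject step, assuming a tiny in-memory document list. Simple bag-of-words cosine similarity stands in for the embedding search a real RAG system would use. The document texts and the `retrieve` and `build_prompt` helpers are hypothetical, and the LLM call itself is left out:

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (stand-in for vector search)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Inject the retrieved passages into the prompt; the model's weights are untouched."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base; in practice this would be a document or vector store.
docs = [
    "The 2024 guideline lowers the recommended screening age to 45.",
    "Contract clauses must be summarized with their section numbers.",
    "Aspirin is not recommended for primary prevention in most adults over 60.",
]

print(build_prompt("What is the recommended screening age?", docs, k=1))
```

Updating the knowledge is just a matter of editing `docs`; nothing about the model changes, which is why RAG suits fast-moving information.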

🎯 Fine-Tuning

Best for domain adaptation — ideal for tasks that require deep specialization or structured responses.

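Fine-tuning continues training the model on task-specific examples, updating its internal parameters (weights) so its behavior shifts toward a narrow domain or a required output format.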
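As a toy analogy only (not an LLM), the sketch below starts from hypothetical "pretrained" parameters and continues training them with stochastic gradient descent on new domain data. Real fine-tuning applies the same idea to a model's weights using example prompts and responses; the two-parameter linear model, the data, and the learning rate here are all illustrative assumptions:

```python
def fine_tune(w: float, b: float, data: list[tuple[float, float]],
              lr: float = 0.05, epochs: int = 200) -> tuple[float, float]:
    """Continue training existing parameters (w, b) with SGD on squared error."""
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            # Gradient of 0.5 * err**2 with respect to w is err * x, and with respect to b is err.
            w -= lr * err * x
            b -= lr * err
    return w, b


# Hypothetical "pretrained" parameters and domain data that follows y = 2x + 1.
pretrained_w, pretrained_b = 1.0, 0.0
domain_data = [(x, 2.0 * x + 1.0) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]

w, b = fine_tune(pretrained_w, pretrained_b, domain_data)
print(f"before: w={pretrained_w:.2f}, b={pretrained_b:.2f}")
print(f"after:  w={w:.2f}, b={b:.2f}")
```

Unlike the RAG sketch, nothing is added to the input here: the parameters themselves move, so the new behavior persists for every future query.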

📚 Examples:

- 🏥 RAG for staying updated with the latest medical knowledge.

- ⚖️ Fine-tuning for summarizing legal documents with precision.

✨ Tip: Use RAG to expand context, and fine-tuning to refine task behavior.

Created by Rachel Li | rachellearns.com

#AI #LLM #RAG #FineTuning #ML #AgenticAI #RachelLearnsAI #MachineLearning #OpenAI #RetrievalAugmentedGeneration
