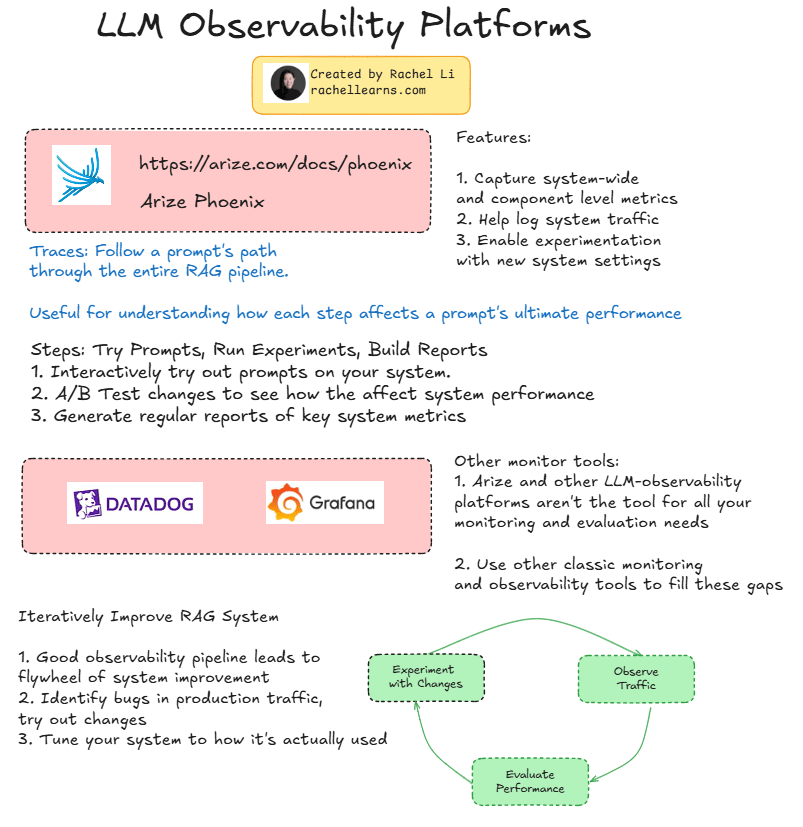

When working with Retrieval-Augmented Generation (RAG), visibility into each step of the pipeline is essential. That’s where LLM observability tools like Arize Phoenix come in.

🔍 What can Arize Phoenix do?

- Trace prompts through your entire RAG pipeline

- Capture both system-wide and component-level metrics

- Log traffic and experiment with new system configurations
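
To make the idea of tracing concrete, here is a minimal sketch of component-level spans around a toy RAG pipeline. It uses only the Python standard library and is not the Phoenix API: the `span` helper, the in-memory `spans` list, and the stand-in `retrieve` and `generate` functions are all hypothetical names invented for illustration. A real setup would hand this work to an observability tool rather than a list.

```python
import time
from contextlib import contextmanager

# Hypothetical in-memory store; a real tool would export spans to a backend.
spans = []

@contextmanager
def span(name, **attributes):
    """Record the wall-clock duration and attributes of one pipeline step."""
    start = time.perf_counter()
    record = {"name": name, "attributes": attributes}
    try:
        yield record
    finally:
        record["duration_s"] = time.perf_counter() - start
        spans.append(record)

def retrieve(query):
    # Stand-in retriever: a real system would query a vector store here.
    corpus = [
        "Phoenix traces LLM calls.",
        "Grafana plots infrastructure metrics.",
    ]
    words = query.lower().split()
    return [doc for doc in corpus if any(w in doc.lower() for w in words)]

def generate(query, docs):
    # Stand-in generator: a real system would call an LLM here.
    return f"Answer to {query!r} using {len(docs)} document(s)."

def answer(query):
    # One outer span for the whole request, one inner span per component.
    with span("rag_pipeline", query=query):
        with span("retrieve") as s:
            docs = retrieve(query)
            s["attributes"]["num_docs"] = len(docs)
        with span("generate") as s:
            result = generate(query, docs)
            s["attributes"]["output_chars"] = len(result)
    return result
```

Calling `answer("phoenix traces")` leaves three records in `spans`, one per component plus one for the whole request, which is roughly the split between component-level and system-wide metrics described above. Inner spans finish first, so they are appended before the outer one.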

📊 Why it’s valuable:

- See how prompt changes affect final performance

- A/B test system tweaks and monitor impact

- Build reports to track system evolution
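
An A/B comparison can start very simply. The sketch below assumes you already have one evaluation score per query for each variant (for example, from an evaluator you trust); the function name `compare_variants` and the dictionary keys are hypothetical, and comparing means is only a first look, not a significance test.

```python
from statistics import mean

def compare_variants(scores_a, scores_b):
    """Summarize per-query evaluation scores for two system variants."""
    if not scores_a or not scores_b:
        raise ValueError("each variant needs at least one score")
    mean_a, mean_b = mean(scores_a), mean(scores_b)
    return {
        "mean_a": mean_a,
        "mean_b": mean_b,
        "delta": mean_b - mean_a,
        # On a tie, keep the baseline (variant A).
        "winner": "B" if mean_b > mean_a else "A",
    }
```

Logging the returned summary for each experiment over time is one lightweight way to build the kind of report mentioned above.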

🔄 It’s a flywheel: Better observability → Better experiments → Better performance

🔧 Of course, no single tool solves everything. Tools like Datadog and Grafana remain crucial for full-stack observability.

💡 Tip: Work in a loop. Try new prompts, run experiments, and refine your system based on real-world usage.

Let’s build smarter, more transparent AI systems.

🧠 Created by Rachel Li | rachellearns.com

#LLM #Observability #RAG #AIEngineering #MLOps #ArizePhoenix #PromptEngineering #Datadog #Grafana #LLMops #AI
